Statistics & Optimization for Trustworthy AI

Our Research

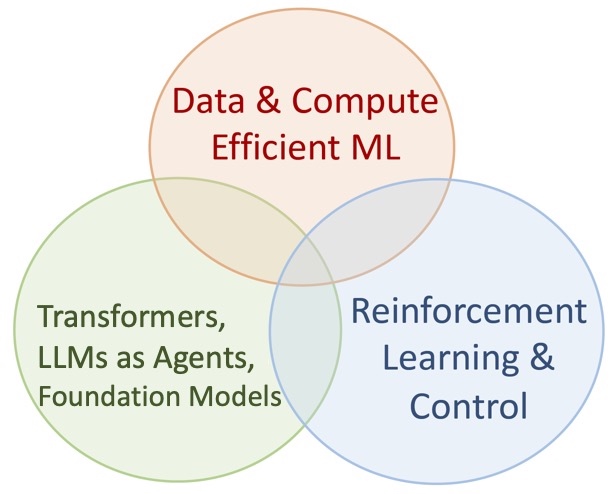

We develop principled and empirically impactful AI/ML methods:

- mathematical foundations of transformers and attention

- trustworthy and efficient language models, LLM systems

- reinforcement learning, control, LLMs as interactive agents

- core optimization and statistical learning theory

News

- I will serve as a Senior Area Chair for NeurIPS 2024.

- New course: Foundations of Large Language Models.

- Link to syllabus (including Piazza and logistics)

- New paper: From Self-Attention to Markov Models, M.E. Ildiz, Y. Huang, Y. Li, A.S. Rawat, S.O.

- Two papers are accepted to AISTATS 2024 (papers upcoming)

- “Mechanics of Next Token Prediction with Self-Attention”, Y. Li, Y. Huang, M.E. Ildiz, A.S. Rawat, S.O.

- “Risk and Emergence of Individual Tasks in Multitask Representations”, M.E. Ildiz, Z. Zhao, S.O.

- Two papers are accepted to AAAI 2024 and one paper is accepted to WACV 2024

- Invited talks at USC, INFORMS, Yale, Google NYC, and Harvard on our work on transformer theory

- Transformers as SVMs and FedYolo will appear in NeurIPS workshops

- Two papers are accepted to NeurIPS 2023

- Grateful for the Adobe Data Science Research award!

- Our new papers develop the optimization foundations of Transformers via a connection to SVMs

- Two papers appeared at ICML 2023

- Two papers appeared at AAAI 2023: Provable Pathways and Long Horizon Bandits

- Papers to appear at AutoML 2023 and ICASSP 2023.

- One paper is accepted to L4DC 2023 as an oral presentation.
